Tornadoes claim hundreds of lives and cause billions of dollars in damage in the United States. But the tornado outbreak across the South on April 27, 2011, was startling, even for veteran forecasters such as Greg Carbin at the National Oceanic and Atmospheric Administration (NOAA) Storm Prediction Center (SPC) in Norman, Okla.

“Through the 24-hour loop here, almost 200 tornadoes had occurred in that period of time and, unfortunately, over 315 fatalities. Primarily Alabama was hit hardest but also fatalities in Tennessee, Georgia, Mississippi, and Virginia for this event,” says Carbin.

As the warning coordination meteorologist at SPC, he would like to see tools that could help predict these killer storms.

“So, that was sobering, you know. Why? Why so many fatalities? Why so much destruction?” asks Carbin.

With support from the National Science Foundation (NSF), computer scientist Amy McGovern at the University of Oklahoma is working to find answers to key questions about tornado formation. Why do tornadoes occur in some storms, but not in others?

“The problem is that if you need to understand the atmosphere, there are a whole lot of variables out there,” says McGovern. “There’s pressure, there’s temperature, there’s the wind vector. And none of the radars, none of the current sensing instruments can get that at the resolution that we really need to fundamentally understand the tornadoes,” she says.

While video from storm chasers and data from Doppler radar can help meteorologists understand some aspects of tornadoes, McGovern uses different, powerful tools: supercomputers, and a technology known as data mining.

“Data mining is finding patterns in very large datasets. Humans do really, really well at finding patterns in small datasets but fail miserably when the datasets get as large as we’re talking about,” she says.

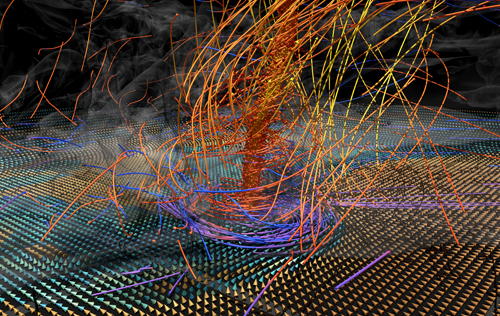

McGovern and her team don’t just study “real” storms. They create supercomputer simulations to analyze how constantly changing storm components interact. And each storm they create may generate a terabyte of data.

“What we’re doing with our simulations is actually being able to sense all of these fundamental variables every 50 to 75 meters,” she explains.

She works with many weather experts, including research meteorologist Rodger Brown at the National Severe Storms Laboratory. He’s helping to sort out which information in the simulation is usable and which is not needed within the enormous amount of data. He points out some of the “unknowns” on a computer animation.

“There’s some of the bad noise bouncing off the edge of the grid. Somehow, it’s noise being amplified, some sort of gravity waves or something. So we’re going to have to do some more experimentation to find out what the problem is,” says Brown.

Even though there are still many questions to answer with these simulations, McGovern says there’s no hardware right now that could be deployed in a tornado to get so many observations. And even if it did exist, it would likely get destroyed in just about any storm.

Kelvin Droegemeier, professor of meteorology and vice president of research at the University of Oklahoma, has studied severe weather in “Tornado Alley” for many years. He’s excited by the collaboration of meteorology and computer science.

“It’s a game-changer, complete game-changer. Radar leads off basically with detecting something that’s already present; the numerical model gives us the opportunity to actually project it and predict it far in advance,” he says.

“So, instead of warning on a detection based on radar [and] some visual sighting, you’re actually warning based on what a numerical forecast model will tell you. So, imagine a tornado warning being issued before a storm is even present in the sky,” says Droegemeier.

“Amy McGovern has pursued interdisciplinary research early in her career,” says Maria Zemankova, program director for the Information and Intelligent Systems (IIS) Division of the Directorate for Computer and Information Science and Engineering (CISE) at the NSF. “A computer scientist, her CAREER award was actually co-funded by CISE and NSF’s Geosciences Directorate. It’s impressive how she’s been able to forge collaborations among researchers in both disciplines of computer science and the atmospheric sciences to yield actionable results.”

But while the ability to predict tornadoes so far in advance would be a breakthrough, there’s another variable that is even harder to predict than a dangerous storm: human behavior.

“That’s a very interesting challenge that also brings in the whole social behavioral issues of, how would people react. Would they kind of dismiss that as, well, there’s not even a storm, I looked outside, the skies are clear?” says Droegemeier.

He notes what meteorology students are learning today is very different from what he learned. And it involves not only collaboration with computer scientists like McGovern, or the electrical engineers building new radars, but other experts as well.

“Learn how to talk to social scientists, learn how to communicate your science outcomes to the Kiwanis Club, to other communities that really need to understand what we’re doing. Because it’s only through that broad understanding, I think, that we can really solve the problem of tornadoes and severe storms and other kinds of hazardous weather,” says Droegemeier.

As a professor of computer science, McGovern says research like this, designed to improve tornado prediction, makes an important point in her classes.

“Computer science can really make a difference to the real world, and I’m trying to bring that to the students,” she says.

McGovern also stresses how critical trust is in working with the forecasters who issue life-saving warnings to thousands of people.

“I can see it has to be really easy to use, and they have to understand it deeply. They don’t want something that just says, ‘here’s the probability.’ It has to be something that we can print out, draw, use some form to say to the meteorologist, ‘this is exactly what we see is going on,’” she says.

She has spent time observing and interacting with forecasters and some of the technologies they use at the Storm Prediction Center, and it’s been an eye-opener.

“It was in the Hazardous Weather Testbed Office, and I got to see them using the different models, and saying, ‘this model says this, this is why we think this is right, this model says this, but we don’t believe the model and this is why.’ So I got to see the humans using the technology and understanding why they like it, and why they don’t, and to me that was pretty darn enlightening!” says McGovern.